The Dystopian Future of Captchas: When Robots Fool Humans

Summary:

CAPTCHAs were designed to tell humans apart from robots, but as AI grows more sophisticated, we find ourselves on the other side of the equation, with robots tricking us instead. In this article, we explore how CAPTCHAs are evolving, the role AI plays in their future, and what that means for the already blurry line between human and machine.

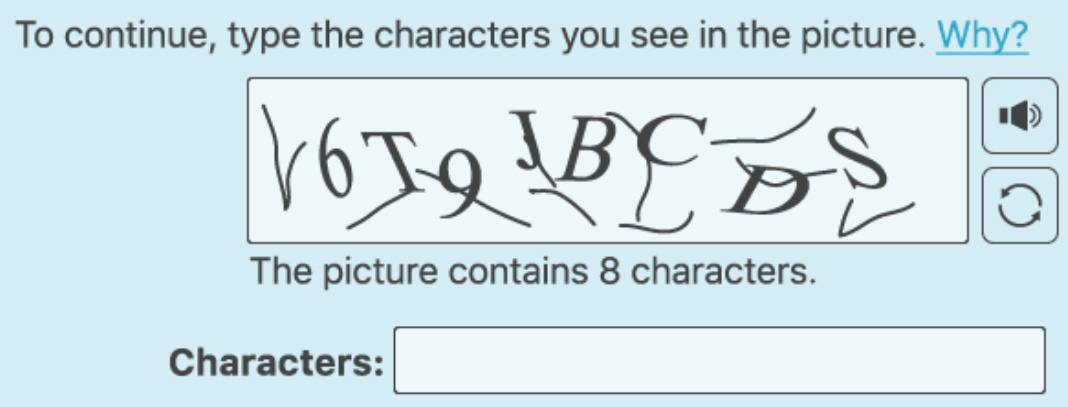

It’s a scenario we’ve all encountered: we’re trying to access a website, fill out a form, or make a purchase, when suddenly, a challenge appears before us—a set of distorted letters, a series of images with traffic lights or crosswalks, or a checkbox asking us to confirm, “I am not a robot.” It’s the humble CAPTCHA, one of the internet’s earliest attempts to prevent automated systems from impersonating humans. These clever puzzles were created as a simple security measure, designed to keep bots at bay and protect websites from malicious activities like spamming, data scraping, and fraud.

The theory behind the CAPTCHA is simple: machines struggle to do what humans do effortlessly—recognize distorted text or identify objects in images. The challenge has long served its purpose by offering a gatekeeper between the human internet user and the bots that lurk in the shadows of the web. But as technology advances, especially artificial intelligence (AI), we find ourselves in a curious position. The very systems designed to distinguish humans from machines are now being outsmarted by the machines themselves.

The “dystopian future” of Captchas, it turns out, isn’t some far-off nightmare; it’s already happening. As AI has grown more sophisticated, it has begun to break through barriers that once stopped machines. Bots can now solve the very puzzles we’ve relied on to prove our humanity. The line between human and machine is blurring, and we may soon be the ones fooled by the technology we created to protect us.

The Evolution of Captchas

CAPTCHAs, or "Completely Automated Public Turing tests to tell Computers and Humans Apart," were first introduced in the early 2000s. Their primary purpose was simple: prevent bots from abusing online systems by creating challenges that only humans could easily solve. Early versions involved decoding warped text, identifying odd characters, or solving simple math problems. They were a response to the rise of bots used for activities such as creating fake accounts, submitting spam comments, and performing automated brute-force attacks.
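To make the "simple math problem" style of early challenge concrete, here is a minimal sketch in Python. It is an illustration only, not how any particular site implemented it: the function names `issue_challenge` and `check_answer` and the in-memory `_pending` store are hypothetical.

```python
import random
import secrets

# Hypothetical in-memory store: challenge id -> expected answer.
_pending = {}


def issue_challenge():
    """Create a one-time addition question and remember its answer."""
    a, b = random.randint(1, 9), random.randint(1, 9)
    challenge_id = secrets.token_hex(8)
    _pending[challenge_id] = a + b
    return challenge_id, f"What is {a} + {b}?"


def check_answer(challenge_id, answer):
    """Return True only for the correct answer to an unused challenge."""
    expected = _pending.pop(challenge_id, None)  # each challenge is single use
    if expected is None:
        return False
    try:
        return int(answer) == expected
    except (TypeError, ValueError):
        return False
```

A script that reads the question text can answer it as easily as a person can, which is why challenges like this stopped only the most basic bots.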

But as AI technology progressed, so did the methods for cracking these tests. Recognizing objects in images was once something only humans could do reliably, but as deep learning algorithms grew more powerful, AI systems began to solve Captchas too. Neural networks trained on millions of images learned to identify street signs, cars, and storefronts with high accuracy, even in distorted or low-quality images. In essence, the machines learned to do what we thought only we could.

The Rise of AI-Powered Solutions

Enter reCAPTCHA, a more advanced CAPTCHA system that originated at Carnegie Mellon University and was acquired by Google in 2009. Its early versions still used distorted text, but later versions moved to other challenges, such as selecting specific images from a grid or identifying street signs, traffic lights, or bicycles in photos. While this seemed at first like an effective way to stop bots, it wasn’t long before AI caught up. Today, machine learning models can solve many of these image challenges, recognizing patterns and objects well enough that even sophisticated Captchas can’t reliably keep them out.

At this point, AI has become so advanced that bots no longer need to rely on manual intervention to solve these puzzles. In fact, some AI systems are designed to trick Captchas by imitating human behavior. Machine learning algorithms can be trained to perform tasks like clicking checkboxes in a way that mimics human interaction or identifying objects in images with nearly flawless precision. This has raised serious concerns about the future of web security and the effectiveness of Captchas as a protective tool.

The Blurring Line Between Humans and Machines

The very premise of Captchas, a test that distinguishes humans from machines, is losing its relevance as AI evolves. These systems were meant to give websites a reliable way to verify that the person interacting with them was human, but with AI’s rapid advancement, that premise is becoming increasingly obsolete. What happens when a machine can pass the same tests we use to prove our humanity? The line between human and bot is no longer as clear as it once was.

As AI becomes more advanced, we’re increasingly encountering situations where the machines are the ones fooling us. Whether it’s a bot successfully solving a CAPTCHA or a machine that can imitate human behavior, the reality is that AI is no longer a passive entity. It’s actively participating in the same digital spaces as we are, sometimes even outsmarting us in the process.

What This Means for Web Security

As bots become more adept at solving Captchas, there’s a growing need for more advanced security systems that can differentiate between humans and machines. Some have suggested using biometric data, like facial recognition or fingerprints, to secure digital spaces, but these solutions also come with their own set of ethical and privacy concerns. Others are exploring behavioral biometrics, which analyze how we type, move our mouse, or interact with digital interfaces, to create a unique user profile. These methods could provide more nuanced ways to identify users, but they also raise questions about consent, surveillance, and data security.
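As a rough illustration of the behavioral-biometrics idea, the sketch below looks at the rhythm of key presses. It assumes the page has recorded a timestamp in milliseconds for each key press. The function names and the `min_jitter_ms` threshold are hypothetical and untuned, and real systems would combine many more signals.

```python
from statistics import mean, pstdev


def timing_features(key_times_ms):
    """Return (mean, standard deviation) of the gaps between key presses."""
    gaps = [later - earlier
            for earlier, later in zip(key_times_ms, key_times_ms[1:])]
    if not gaps:
        raise ValueError("need at least two key presses")
    return mean(gaps), pstdev(gaps)


def looks_scripted(key_times_ms, min_jitter_ms=5.0):
    """Naive heuristic: flag typing whose rhythm is almost perfectly regular.

    min_jitter_ms is an arbitrary illustrative threshold, not a tuned value.
    """
    _, jitter = timing_features(key_times_ms)
    return jitter < min_jitter_ms
```

Perfectly even input such as `[0, 100, 200, 300]` would be flagged, while uneven human-like timing would not. A bot that adds random delays would slip past a check this simple, so the sketch also shows why such signals are hard to rely on by themselves.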

The increasing sophistication of AI also means that traditional Captchas may soon become a thing of the past. As machines continue to evolve, it’s likely that we’ll need to rethink our entire approach to online verification. Perhaps future Captchas will focus less on preventing bots from solving puzzles and more on detecting the nuanced differences between how humans and machines interact with the digital world.

The Ethical Dilemma: Who’s in Control?

The growing ability of AI to bypass Captchas presents an ethical dilemma: who is really in control of these systems? Captchas were originally designed to prevent malicious activity and protect users from bots. But as AI becomes more powerful, it blurs the line between human and machine, leaving us unsure whether we can trust the technology we’ve created. We now find ourselves grappling with how much we should rely on AI to make decisions for us and how much control we should retain over these systems.

In many ways, AI’s ability to outsmart Captchas reflects the larger conversation about technology’s role in our lives. Are we creating tools that serve us, or are we building systems that ultimately surpass our own understanding and control?

Conclusion

We no longer live in a world where machines are simply tools for solving puzzles. We are in an era where machines learn, adapt, and sometimes even trick us into believing they are human. As Captchas evolve and AI continues to advance, the line between humans and robots will only become harder to discern. While this may concern some, it also signals a new era in digital security and technology, one that requires us to think carefully about the systems we rely on and how we interact with the machines that are now an integral part of our lives.

muppazine
